We put ChatGPT through its mathematical paces to see how it performed on a variety of CENTURY’s maths questions from Functional Skills through to GCSE. In part one of this two-part series we will look at which questions ChatGPT aced and those it struggled with, and in part two we will explore how maths teachers can leverage generative AI technology to support teaching and learning in the classroom.

Much of the furore around ChatGPT has been based around educators’ fears that students will use it to cheat and teachers won’t be able to tell, potentially meaning the end of homework. However, we don’t think teachers need to start panicking just yet, especially those teaching maths.

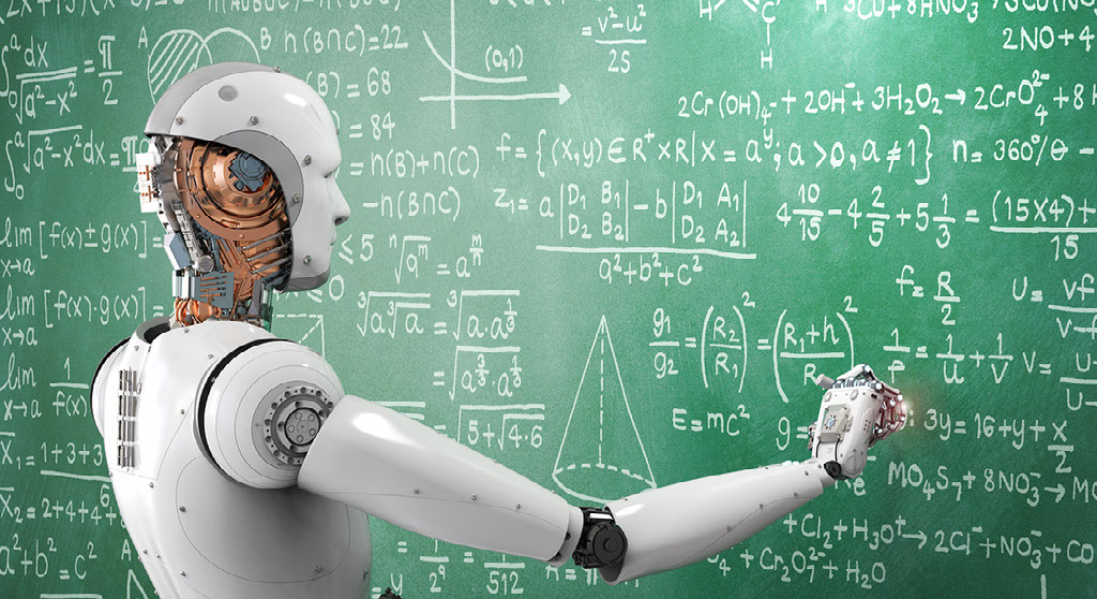

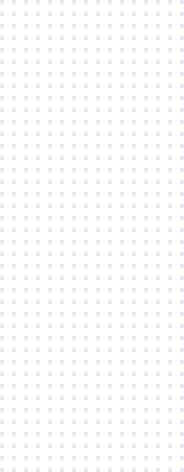

Of the 20 questions we selected to test ChatGPT, 5 were too visually complicated to enter (read: they included diagrams). Figure 1 cannot be described in text without effectively answering the question. With enough time and patience it may be possible to describe the diagram in figure 2, but doing so would take as much effort as answering the question itself, if not more, which would defeat the purpose of using ChatGPT as a shortcut.

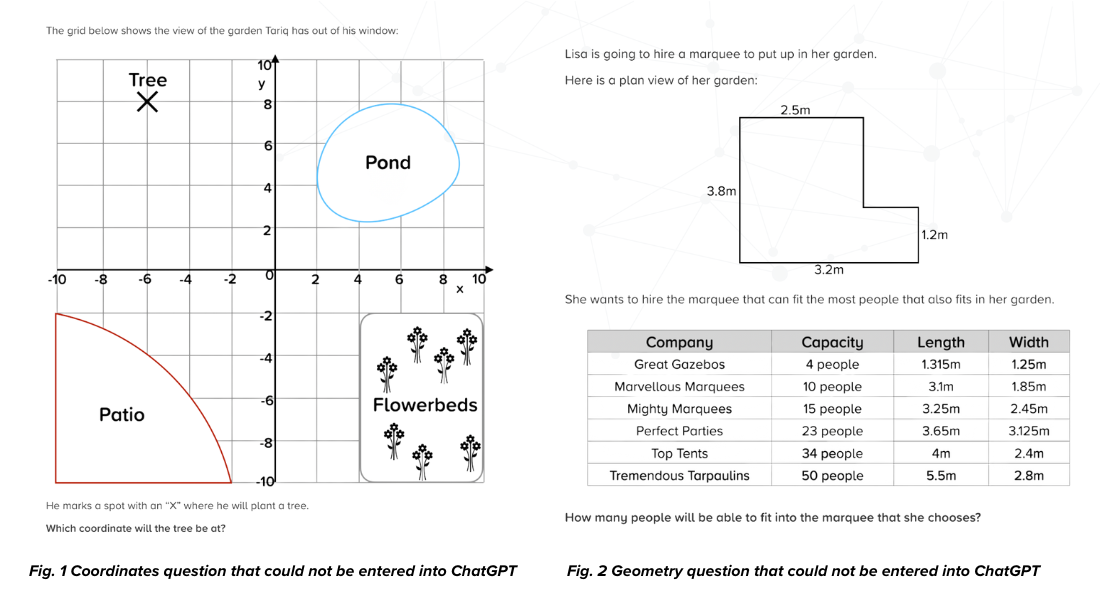

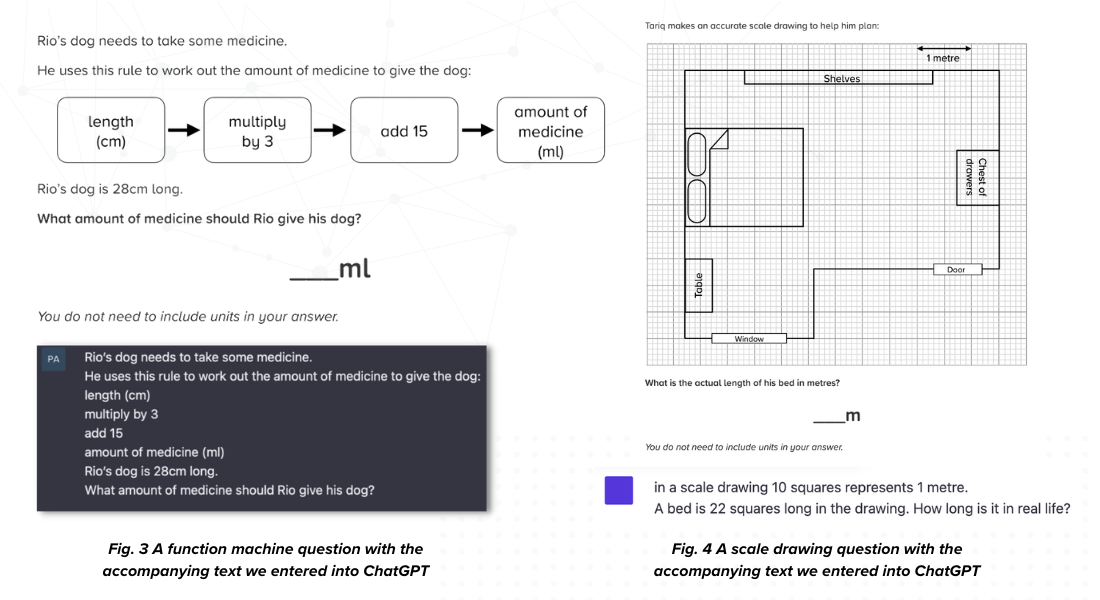

8 of the remaining 15 questions required our maths teachers to think carefully about how to prompt ChatGPT to answer the question effectively. Figure 3 shows a fairly simple example where the only requirement was to turn an image of a function machine into text. The more complicated example in figure 4 requires the user to work through the first part of the answer themselves before they can even enter the question into ChatGPT. The user must show some understanding of the method required to find the correct answer, in this case how scale drawings work. For us as educators, this raises the question of whether the user is really using ChatGPT any differently from another piece of technology: the humble scientific calculator.

We found that ChatGPT needs help to understand some relatively simple maths questions. The average secondary school student would need a decent understanding of the topic, and would need to do some of the work themselves, to prompt ChatGPT into giving the right answer. Preparing the inputs is hard work even for the most industrious of those hoping to use ChatGPT to avoid doing the work. And then we came to the next hurdle: even if you manage to input a complicated maths question in a way that should lead to an effective answer, ChatGPT makes errors.

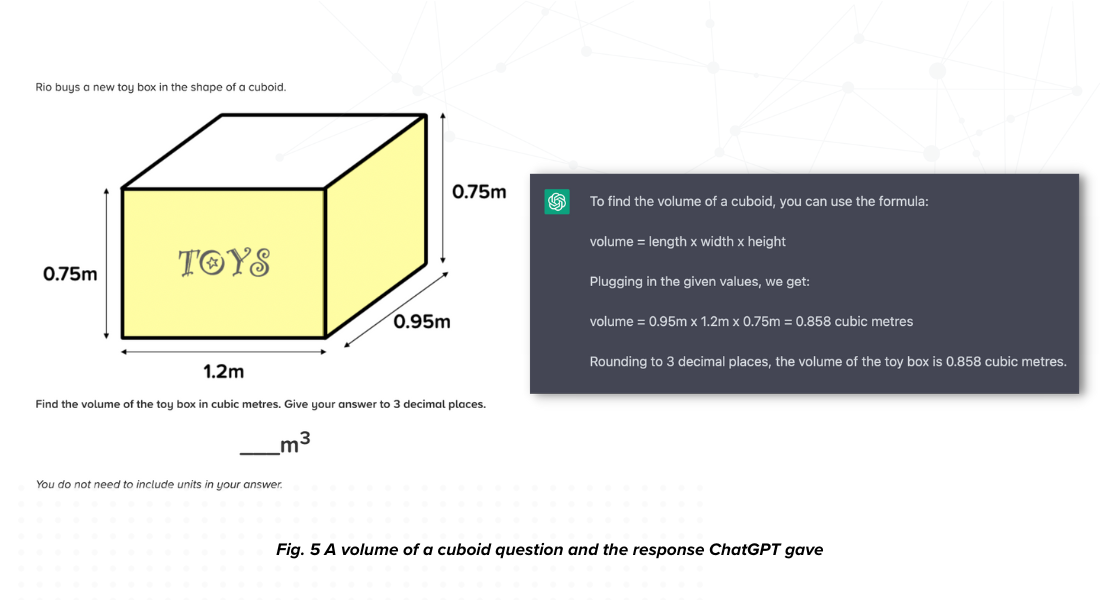

That’s right: even when a user has inputted the appropriate prompts into the AI correctly, and even when the AI can demonstrate that it is using the information inputted correctly, the results are mixed. Often, the AI appeared to ‘understand’ the prompt it was given, but then tripped up on the comparatively simple arithmetic, as in figure 5. Interestingly, ChatGPT’s final answer (0.858) was eerily similar to the actual correct answer (0.855), which could be confusing at first glance. Those who would employ ChatGPT to cheat should be warned that it may give them plausible-looking answers that are still incorrect.

There will always be pupils who try to use new technology to create shortcuts and avoid work, but teachers can do what they have always done to detect disingenuous behaviour. Our analysis shows that just copying and pasting the questions will not do: some level of interpretation and careful checking of answers is needed to make ChatGPT effective for maths. Any user trying to use it with minimal effort will find ChatGPT unable to provide the answers they are looking for.

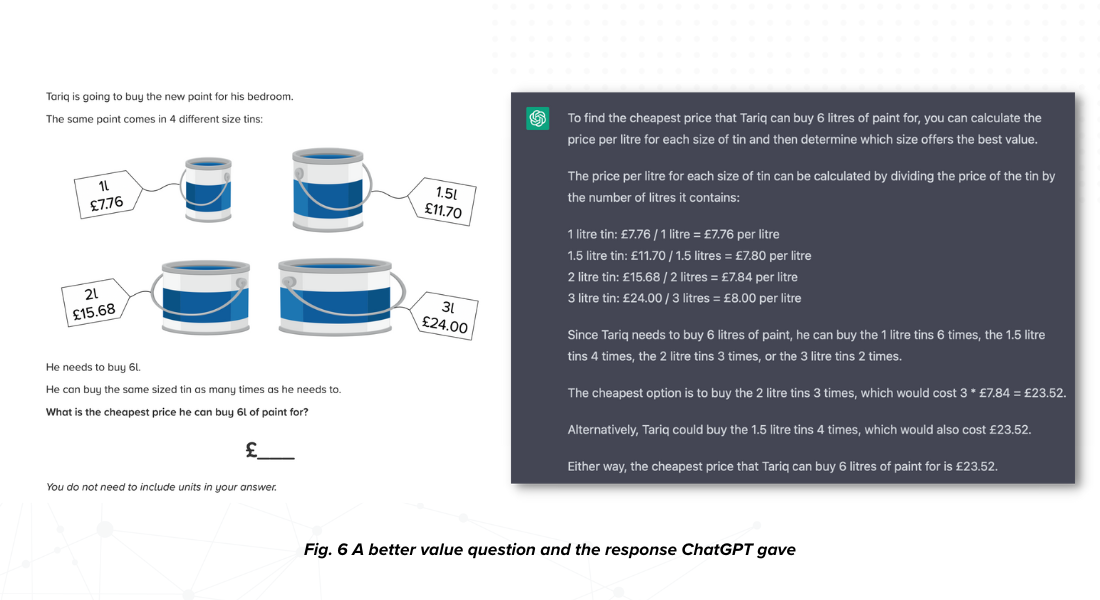

ChatGPT just does not behave like a real student, as we saw when we entered the better value question in figure 6. Here, ChatGPT started relatively well, describing a long-way-around (but correct) method to compare prices by working out the price per litre for each differently priced tin. ChatGPT completed the difficult bit, carrying out all four calculations correctly. Then everything went wrong: ChatGPT failed to identify the lowest of the four prices, naming the second highest price as the “cheapest” owing to a relatively simple mistake in which it multiplied the price per litre instead of the price per tin. To really hammer the point home, ChatGPT then, completely unprompted, suggested a second, alternative, completely wrong answer.

Although ChatGPT’s initial method was correct, it is not the most efficient one. In better value questions like this it can often be useful to reduce each option to one unit, but you must then do a further multiplication to find the final answer. This question, however, is designed so that the 4 tin sizes (1, 1.5, 2 and 3) are all factors of the final quantity required (6), so it could be answered with just one multiplication step per tin size instead. Perhaps this points to a subtle difference between how a human and an artificial intelligence approach a question: prior experience might naturally lead the human to choose a more efficient method.
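To illustrate the one-multiplication shortcut, here is a minimal Python sketch. The tin sizes and the 6 litre target come from the question as described above, but the prices are invented for illustration, since the real ones appear only in figure 6. The function names are ours too.

```python
REQUIRED_LITRES = 6

# Tin size in litres -> price in pence.
# Sizes are from the article; the prices are HYPOTHETICAL.
hypothetical_prices = {1: 450, 1.5: 640, 2: 830, 3: 1260}


def total_cost(size_litres, price_pence, required=REQUIRED_LITRES):
    """Cost of buying exactly `required` litres using tins of one size."""
    tins = required / size_litres
    if tins != int(tins):
        raise ValueError("tin size must divide the required quantity exactly")
    # One multiplication per option: number of tins times price per tin.
    return int(tins) * price_pence


def cheapest_option(prices, required=REQUIRED_LITRES):
    """Return the best-value tin size and the total cost for every size."""
    totals = {size: total_cost(size, price, required)
              for size, price in prices.items()}
    best_size = min(totals, key=totals.get)
    return best_size, totals


best_size, totals = cheapest_option(hypothetical_prices)
print(best_size, totals)
```

Because every tin size divides 6 exactly, there is no need to work out a price per litre first. Comparing the four totals directly is enough.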

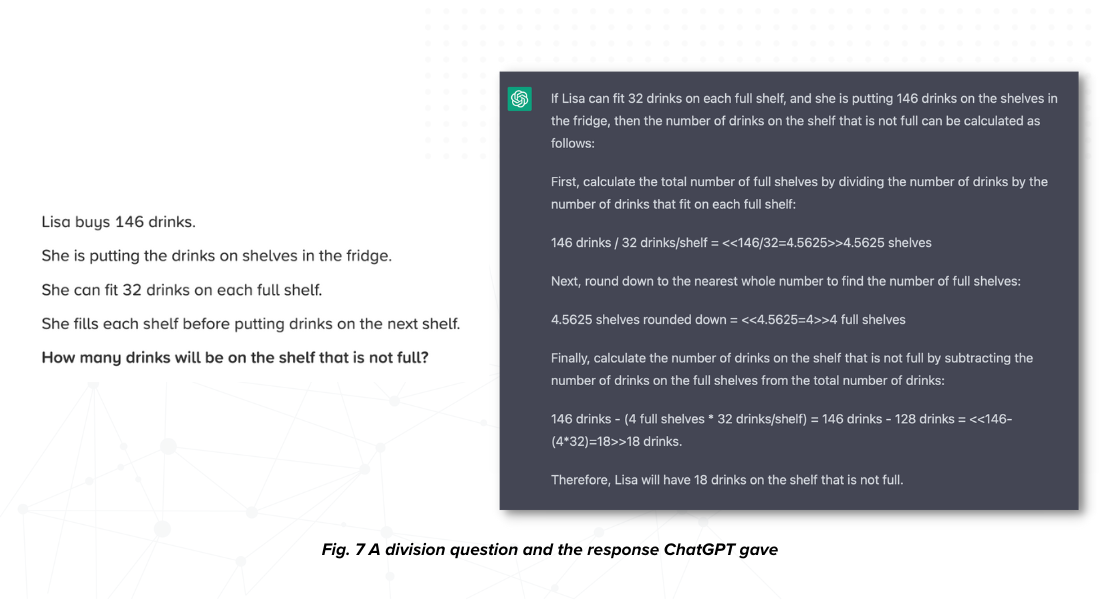

Again, ChatGPT took a different approach from the one the average human likely would with the problem solving question in figure 7, though this time it found the correct answer. ChatGPT’s method was perfectly valid, but many students would find it simpler to repeatedly subtract 32 from 146 until they reach a number less than 32.
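The repeated subtraction a student might use can be sketched in a few lines of Python. The question's wording is only visible in figure 7, so this shows just the arithmetic on the two numbers quoted above. The function name is our own.

```python
def repeated_subtraction(total, step):
    """Subtract `step` from `total` until less than `step` remains.

    Returns how many subtractions were made and what is left over.
    """
    count = 0
    while total >= step:
        total -= step
        count += 1
    return count, total


count, left_over = repeated_subtraction(146, 32)
print(count, left_over)  # 146 -> 114 -> 82 -> 50 -> 18, so 4 subtractions, 18 left
```

This is the same calculation as a division with remainder, which Python exposes as `divmod(146, 32)`.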

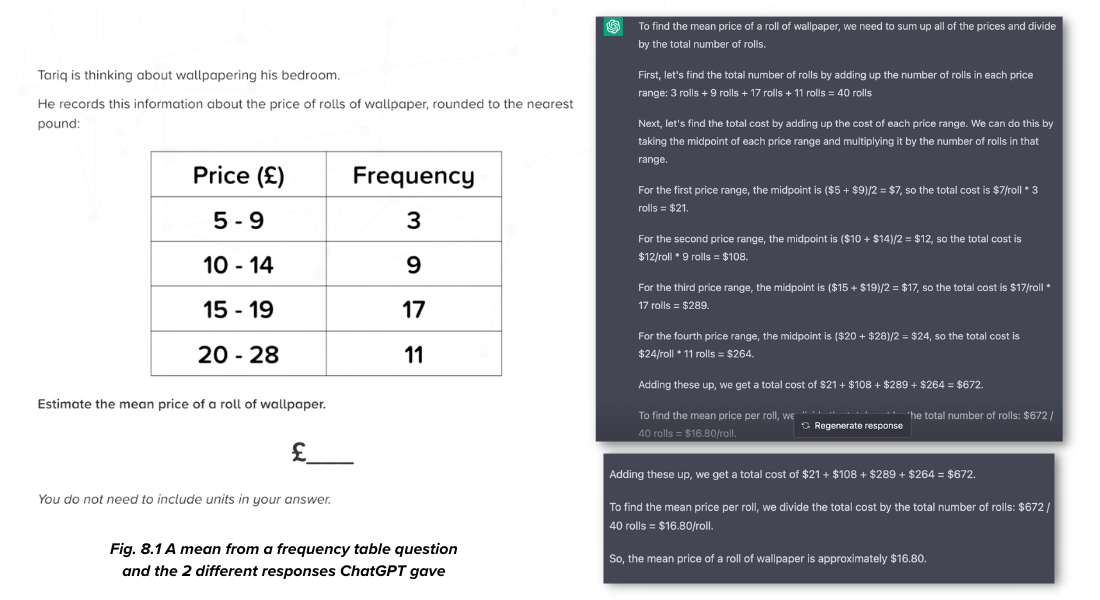

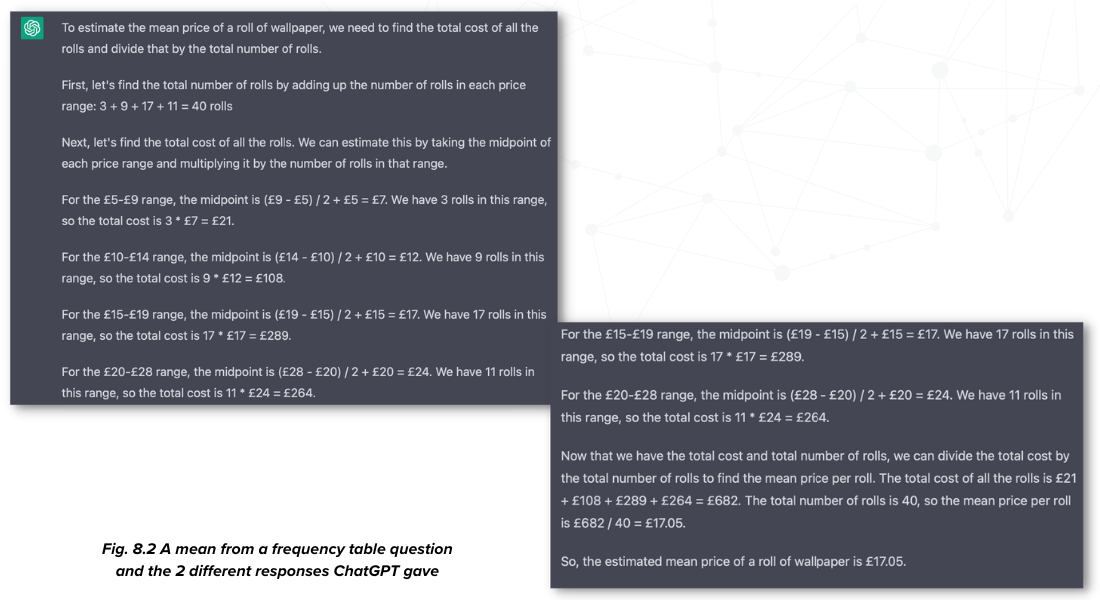

ChatGPT is not deterministic, either: enter the same question or prompt twice and you will almost always be greeted with different responses. Figure 8 shows 2 separate responses given to the same question. On the first attempt ChatGPT used the correct method for finding the mean from a grouped frequency table, but it failed at the penultimate stage by calculating 21 + 108 + 289 + 264 = 672 (instead of 682).
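For reference, the method ChatGPT was attempting can be sketched in Python as below. The four products are the ones quoted above. The small table at the end is hypothetical and is not the table from the question, whose class boundaries and frequencies are only shown in the figure.

```python
def grouped_mean(table):
    """Estimate the mean from (lower, upper, frequency) rows via class midpoints."""
    total_fx = 0
    total_f = 0
    for lower, upper, frequency in table:
        midpoint = (lower + upper) / 2
        total_fx += midpoint * frequency  # midpoint x frequency for this class
        total_f += frequency
    return total_fx / total_f


# The midpoint x frequency products quoted in the article.
products = [21, 108, 289, 264]
products_total = sum(products)
print(products_total)  # 682, not the 672 that ChatGPT reported

# HYPOTHETICAL table, purely to show the function in use.
hypothetical_table = [(0, 10, 2), (10, 20, 5), (20, 30, 3)]
print(grouped_mean(hypothetical_table))
```

The slip happened in the addition, not in the method, which is exactly the kind of error a quick check like `sum(products)` catches.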

On the second attempt it used a similar and correct, albeit slightly more complicated, method that added one step when finding the midpoint of each range. This time, though, all of ChatGPT’s calculations were correct. Interestingly, on this attempt it also picked up that the question was in pounds, not dollars. This inconsistency adds yet another layer of unreliability for students looking for shortcuts to obtain answers, although it would make for an interesting discussion point in a lesson.

On a macro level ChatGPT behaves differently to a human student too. Most maths homework tasks contain at least half a dozen questions, often of varying topics or graded in difficulty. The majority of teachers have an innate sense, developed through years of practice and experience, of which questions students should be able to tackle easily and which are more difficult. When faced with a set of answers generated by ChatGPT, teachers will recognise the inconsistencies that we have highlighted and something will ring an alarm bell that this is not quite right.

We hope this blog has demonstrated that ChatGPT can be useful in some cases to students who purely wish to extract answers, but not in others, where students will actually need to demonstrate a clear understanding of the question before they can use it as a tool to answer their homework for them. In part two we will look at how teachers can make the best use of ChatGPT as a resource in the classroom.

ChatGPT is a generative language model, a very different kind of AI to CENTURY, which is a custom recommender system. Learn more about how an AI like CENTURY can support teaching and learning here.