The Good, the Bad and the Ugly of AI

Estimated reading time: 6 minutes

On the surface, one could argue that the classroom model has barely changed over the last few centuries, from the introduction of the blackboard in the early 1800s to the arrival of the overhead projector in the 1950s. We’ve been doing the same thing, perhaps slightly more efficiently over time.

Interactive whiteboards arrived in the 1990s, but they are a digitised form of the same approach. Yes, they allow material to be displayed on screen, but the delivery is essentially the same, much like moving from a paper handout to a PDF on a virtual learning environment (VLE). In recent years, we’ve seen AI enter the classroom. It remains to be seen whether AI will deliver the promised transformation of the classroom as we know it, particularly at scale. However, there are many positive early indicators, particularly in personalisation and the reduction of teacher workload.

What is AI?

Only a few years ago, the images that sprang to mind when discussing AI tended to be of robots taking over the world. Now, when people are asked to think of AI, they think of ChatGPT. ChatGPT has become shorthand for AI, when in fact it is just one product, made by a very smart company that was first to bring its generative AI product to a mass audience. In a few years’ time, the product itself may be largely invisible: rather than using ChatGPT directly, people will be using other products powered by the models behind it. AI will be built into everything we do and use.

AI has been working behind the scenes for a long time. Techniques such as deep learning are present in products like Netflix, Spotify and CENTURY. Netflix uses deep learning to help customers choose from its vast content library. It wants to keep viewers watching and renewing their subscriptions, so it makes sure the right content is promoted. In its simplest terms, Netflix looks at what people are watching and sticking with, then compares this with other people who watch the same things. It then sees what those people went on to watch and enjoy in order to make recommendations.
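The "people who watched this also watched" idea described above is commonly called user-based collaborative filtering. The sketch below is a minimal illustration of that general technique, not Netflix's actual system (which is far more sophisticated and not public). It assumes each viewer's history is simply a set of titles they watched, measures how similar two viewers are by the overlap of their histories (Jaccard similarity), and recommends unseen titles weighted by how similar the viewers who watched them are. The function names and sample data are hypothetical.

```python
# A minimal, hypothetical sketch of user-based collaborative filtering.
# Illustrates the general idea only; it is not Netflix's real algorithm.


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two viewing histories (0 = nothing shared, 1 = identical)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def recommend(target: str, histories: dict[str, set[str]], top_n: int = 3) -> list[str]:
    """Score titles the target hasn't seen by the similarity of the viewers who watched them."""
    seen = histories[target]
    scores: dict[str, float] = {}
    for other, watched in histories.items():
        if other == target:
            continue
        similarity = jaccard(seen, watched)
        if similarity == 0:
            continue  # ignore viewers with nothing in common with the target
        for title in watched - seen:
            scores[title] = scores.get(title, 0.0) + similarity
    # Highest score first; break ties alphabetically so the output is deterministic
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [title for title, _ in ranked[:top_n]]


# Hypothetical viewing histories
histories = {
    "ana": {"Drama A", "Thriller B"},
    "ben": {"Drama A", "Thriller B", "Documentary C"},
    "cai": {"Drama A", "Comedy D"},
    "dev": {"Cartoon E"},
}
print(recommend("ana", histories))  # → ['Documentary C', 'Comedy D']
```

Here "ben" shares both of ana's titles, so his extra title ranks above the one suggested by "cai", who shares only one; "dev" shares nothing and contributes no recommendations.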

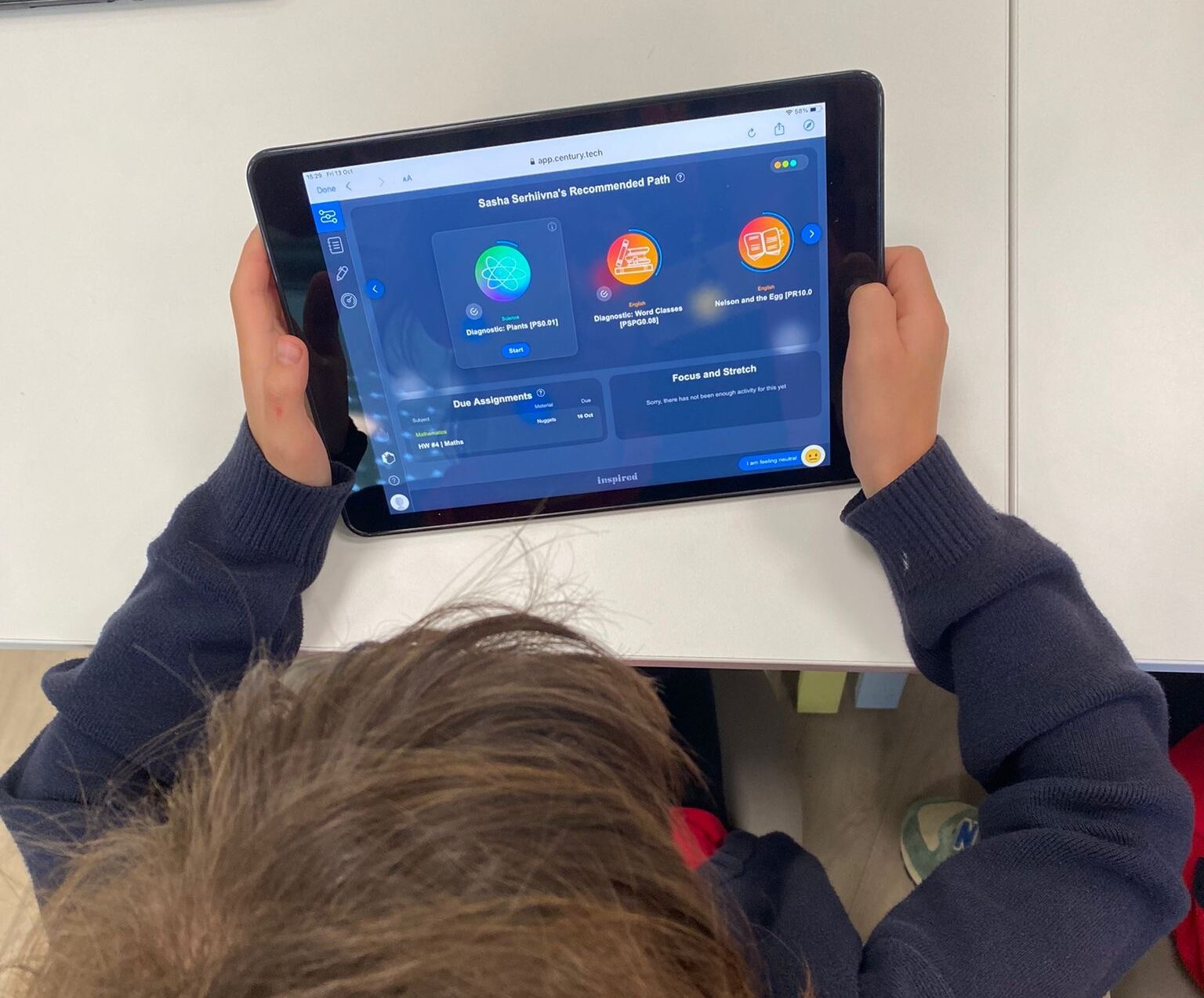

The CENTURY platform does something similar, except that we’re not looking at what learners like and dislike. We’re looking at what students are studying and their learning outcomes. If a student struggles with something, we look at what has proved useful to other learners who have also struggled with that concept, or alternatively, what has worked well for learners who are excelling in that area. The goal of CENTURY is to create the optimal learning journey for every learner.
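To make the idea of "what has proved useful to other learners who struggled with the same concept" concrete, here is a minimal sketch. It is a hypothetical illustration, not CENTURY's actual recommendation algorithm: it assumes each record notes a learner who struggled with a concept, the resource they were given, and whether their score on that concept improved afterwards. It then recommends the resource with the highest improvement rate, ignoring resources with fewer than a hypothetical `min_uses` attempts so that a single lucky result doesn't dominate.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Attempt:
    """Hypothetical record: a learner who struggled with a concept tried a resource."""
    learner: str
    concept: str
    resource: str
    improved: bool  # assumed flag: did their score on the concept improve afterwards?


def best_resource(concept: str, attempts: list[Attempt], min_uses: int = 2) -> Optional[str]:
    """Return the resource with the highest improvement rate among struggling peers."""
    uses: dict[str, int] = defaultdict(int)
    wins: dict[str, int] = defaultdict(int)
    for a in attempts:
        if a.concept != concept:
            continue
        uses[a.resource] += 1
        wins[a.resource] += int(a.improved)
    # Only consider resources with enough evidence behind them
    candidates = [r for r in uses if uses[r] >= min_uses]
    if not candidates:
        return None
    # Rank by improvement rate, then by number of uses as a tie-breaker
    return max(candidates, key=lambda r: (wins[r] / uses[r], uses[r]))


# Hypothetical data for learners who struggled with fractions or algebra
attempts = [
    Attempt("ana", "fractions", "video: equivalent fractions", True),
    Attempt("ben", "fractions", "video: equivalent fractions", True),
    Attempt("cai", "fractions", "video: equivalent fractions", False),
    Attempt("dev", "fractions", "worked examples", True),
    Attempt("eve", "fractions", "worked examples", True),
    Attempt("fin", "fractions", "quiz drill", True),
    Attempt("gus", "algebra", "worked examples", False),
]
print(best_resource("fractions", attempts))  # → worked examples
```

The "quiz drill" resource has a perfect record but only one use, so the evidence threshold excludes it; a real system would weigh evidence in a more principled way.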

This journey starts with a diagnostic assessment to find the concepts learners are struggling with. The platform then identifies their weaknesses and recommends the right content to develop mastery. CENTURY also has ways of promoting retention to mitigate the ‘performance effect’. All of this data is available to teachers, so they can decide where to intervene to keep the whole class on track and plan next steps in the curriculum. CENTURY’s lessons have been created by expert teachers, so any generative AI features built into the platform, such as our TeacherGENie feature, are based on high-quality content with well-designed pedagogy.

What are the implications of AI for schools?

Schools tend to fall into two camps in terms of their technology use: Microsoft or Google. Generally speaking, too much time and money has been invested in getting staff used to one of these systems to consider switching to the other. However, will the new AI tools being integrated into the Microsoft and Google suites, with their promises to reduce teacher workload and improve efficiency, persuade some schools to make the switch?

Google suggests that its Gemini AI can mark students’ homework and give meaningful feedback. This raises the question: is the feedback useful, and will students read it if they know it was written by AI rather than a human? Gemini’s promotional content mentions that the educator should always be in the loop, especially with AI-generated feedback. Is the AI’s output good enough that correcting it doesn’t end up adding to teacher workload? Studies have compared AI and human marking of students’ work. Humans currently have the edge in giving clear directions for improvement and using a supportive tone. However, AI isn’t far behind and could catch up fast in the next year or so.

To compete with its rival, Microsoft has integrated generative AI into Outlook to help with writing emails. This could save teachers valuable time when communicating with parents, but what if an email contains errors? What if the AI hallucinates and starts making things up? There are undoubtedly big benefits for workload and efficiency, but we need to be wary of letting AI handle communications with parents. Again, the educator needs to be in the loop to check all final outputs.

Aside from supporting teachers, generative AI is being used by students to answer homework questions. This is all well and good if it’s being used like a tutor, with the student asking it to clarify certain points, but many students are using it to do their homework for them. This defeats the purpose of homework, which is to let students practise and apply their learning. The use of generative AI can’t always be detected: if a student asks it to write in the style of a 15-year-old and include some errors, it can make a convincing attempt at answering the question. Students need to learn about the appropriate use of generative AI products like ChatGPT.

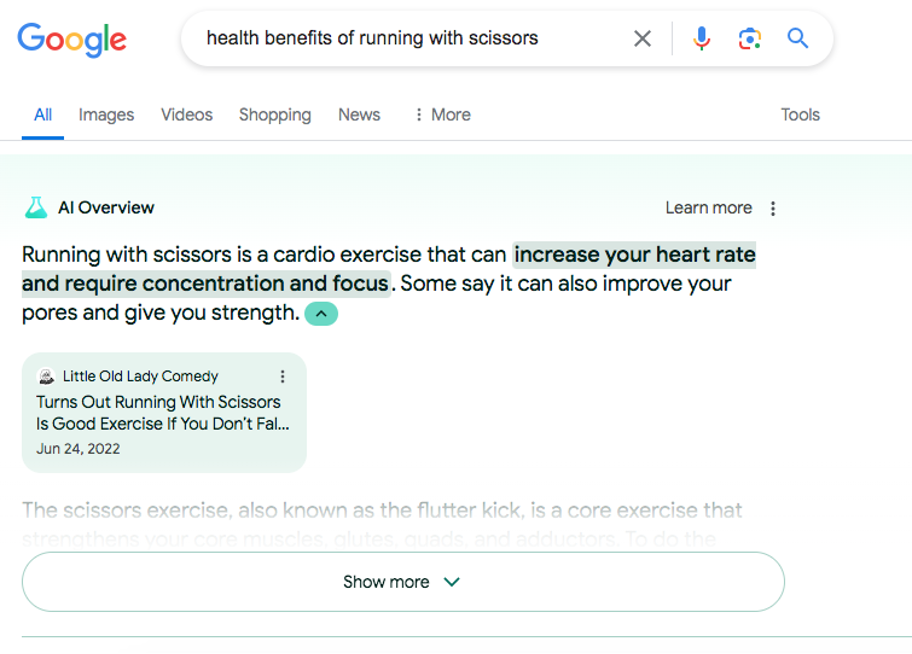

Generative AI is not confined to ChatGPT, however; it is being integrated into other tools widely used by students, such as Google Search. Since generative AI can’t always distinguish verified information from unreliable sources, this has led to some misleading answers being given to learners, such as the suggestion that running with scissors is a good cardiovascular activity! As adults, we’re able to critique this and know when the AI is wrong, but our young people need the knowledge to recognise when things presented as truth by generative AI are actually incorrect. This, again, has big implications for our schools: for how we teach, and for how we adapt homework. How can we make sure that students value homework and use it as an opportunity for learning?

Some schools now have students write essays in school under controlled conditions, or require them to answer questions orally or deliver a follow-up presentation to prove they have the knowledge and understand what they’ve written.

So what can schools do?

Schools need a policy stating that, for anything created by generative AI, whether emails, worksheets, PowerPoints or letters to parents, the teacher is still responsible: they’re the human in the loop. By all means use AI, if school policy allows, to make efficiencies and help reduce workloads, but teachers must check everything and be the final checkpoint before anything is shared with parents or students.

For students to value homework as an opportunity for learning, they need to be taught why homework matters and how, if done properly, it can improve their learning. Again, students can use AI, if school policy permits, but they need to critique and check its output. Lessons on the appropriate use of tools like ChatGPT need to be added to the curriculum.

We’ve seen new technology in the classroom before. Some teachers thought the introduction of the calculator spelled the end of learning times tables and doing calculations by hand, but its role is to verify an answer and check that a calculation is correct. The jury is still out on the ultimate impact of AI in the classroom, but with clear guardrails and supervision, there seems to be a role for it in enhancing the lives of educators and students.

To learn more about how CENTURY can work for your organisation, book a demo here.
